So, I’ve actually been quite busy this last month with different forms of online teaching, and only occasionally had the inspiration to continue with audio/visual experiments as part of the Saundaryalahari project. The creative spirit sometimes needs to collect itself after a time of innovation - and to harvest new ideas for taking things to the next step. Mostly, I was just busy and a bit emotionally exhausted with all the tumult in the world bearing down…

Almost spontaneously, I’ve begun new processes with a new tool in my arsenal: a Gieskes 3TrinsRGB+1c video synthesizer. The 3Trins is a very creative approach to analogue video synthesis, and - perhaps on its surface - it might seem like just another tool for creating supportive visuals to accompany music. I was, however, mostly curious about this device because it accepts external control via audio and/or CV signals, and because it has an audio output that resolves the video oscillators into sound - something that can be processed and/or recirculated back into the video generation, and thus potentially offers new perspectives on the reciprocal processes I am exploring:

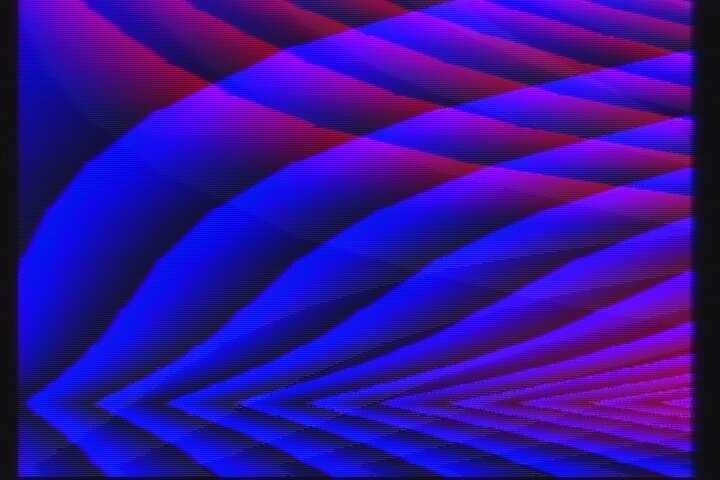

The main difference between the 3Trins and Pixivisor will be more effective control over the generation of visuals. The first experiments with the 3Trins largely have to do with getting accustomed to this kind of visual control - and it is intriguing. On the first full day of experimentation, I was able to improvise this short film. The inputs were largely occupied by LFOs created by the Mutable Instruments Peaks - which, cycled fast enough, allow for the generation of triangle and square shapes from their waveforms. One additional oscillator was added to the audio output - otherwise the audio was entirely generated from a delayed output signal produced by the 3Trins.

Aside from sync and colour balance - aspects that Pixivisor didn’t offer active control over either - the inputs to each of the colour channels allow creative control by inputting VCOs. This is a fairly obvious choice, because VCOs can usually generate much higher frequencies, which create layered visual effects on each channel. Furthermore, each channel can also be routed to one of the two internal LFOs and made to oscillate a little. In this way you can create stacked oscillator scenarios.
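To make the link between oscillator frequency and picture a bit more concrete, here is a minimal Python sketch. It is only a toy model of a raster scan, not the 3Trins circuit: the tiny frame size and the names `render_channel`, `square`, `triangle` and `show` are assumptions made up for illustration. The idea it shows is general to analogue video: an oscillator running at a whole multiple of the scan-line rate draws the same thing on every line (vertical bars), one running slower than the line rate changes only from line to line (horizontal bands), and anything in between drifts diagonally.

```python
LINES = 8      # scan lines per frame (toy value, real video has hundreds)
SAMPLES = 16   # brightness samples per scan line (toy value)


def square(phase):
    """Square wave: 1.0 for the first half of each cycle, 0.0 for the second."""
    return 1.0 if (phase % 1.0) < 0.5 else 0.0


def triangle(phase):
    """Triangle wave: ramps from 0.0 up to 1.0 and back down once per cycle."""
    p = phase % 1.0
    return 2.0 * p if p < 0.5 else 2.0 * (1.0 - p)


def render_channel(wave, cycles_per_line):
    """Sample one colour channel along the scan, line by line.

    `cycles_per_line` is the oscillator frequency expressed relative to the
    scan-line rate (an assumption of this toy model, not a 3Trins setting).
    """
    frame = []
    for line in range(LINES):
        row = []
        for x in range(SAMPLES):
            t = line + x / SAMPLES  # time in scan lines since frame start
            row.append(wave(t * cycles_per_line))
        frame.append(row)
    return frame


def show(frame):
    """Print a frame as ASCII art, darker characters meaning brighter pixels."""
    shades = " .:*#"
    for row in frame:
        print("".join(shades[min(int(v * len(shades)), len(shades) - 1)] for v in row))


if __name__ == "__main__":
    show(render_channel(square, 2))        # VCO-like rate: vertical bars
    print()
    show(render_channel(square, 0.25))     # LFO-like rate: horizontal bands
    print()
    show(render_channel(triangle, 2.125))  # slightly detuned: diagonal drift
```

Rendering three such channels with different waveforms and rates, one each for red, green and blue, gives a rough feel for the layered effects described above.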

One traditional way of creating video synthesis is to use a video camera and have it “feed back” the image shown in real time on a screen. This creates the familiar “mirror-image” effect that we know from those carnival fun-houses, but it is also a significant method of adding to a reciprocal process. The image is constantly fed back onto itself, so that it generates visual patterns. By moving the camera a little in each direction, the oscillation mimics that of the width of an LFO/VCO fed into the colour channel. Actually, it is the most linear way of creating reciprocal processes - but the difference may be that modifying that input process - the “artistic” freedom element - is a bit trickier. I’ll keep exploring that though, and may use Pixivisor to supplement these processes on a different visual channel. The risk is probably that it will quickly become much too complicated. More to come…